
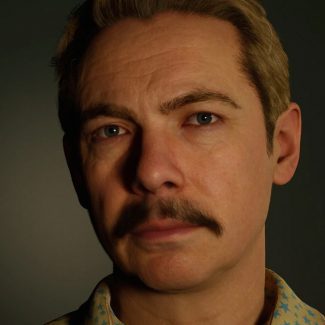

Epic Games, the company that makes Unreal Engine, recently released a substantial update to its MetaHuman character creation tool which, for the first time, allows developers to import scans of real people for use in real-time applications. The improvements offer a glimpse of a future where anyone can easily bring a realistic digital version of themselves into VR and the metaverse at large.

Epic’s MetaHuman tool is designed to make it easy for developers to create a wide variety of high-quality 3D character models for use in real-time applications. The tool works like an advanced version of a ‘character customizer’ that you’d find in a modern videogame, except with a lot more control and fidelity.

On its initial release, developers could only start building their characters from a selection of preset faces, and then use the tool's controls to modify the character’s look to their taste. Naturally, many experimented with trying to create their own likeness, or that of recognizable celebrities. Although MetaHuman character creation is lightning fast compared to creating a comparable model manually from the ground up, achieving the likeness of a specific person remained challenging.

But now the latest release includes a new ‘Mesh to MetaHuman’ feature which allows developers to import face scans of real people (or 3D sculpts created in other software) and then have the system automatically generate a MetaHuman face based on the scan, including full rigging for animation.

There are still some limitations, however. For one, hair, skin textures, and other details are not automatically generated; at this point the Mesh to MetaHuman feature is primarily focused on matching the overall topology of the head and segmenting it for realistic animations. Developers will still need to supply skin textures and do some additional work to match hair, facial hair, and eyes to the person they want to emulate.

The MetaHuman tool is still in early access and intended for developers using Unreal Engine. And while we’re not quite at the stage where anyone can simply snap a few photos of their head and generate a realistic digital version of themselves, it’s pretty clear that we’re heading in that direction.

However, if the goal is to create a completely believable avatar of ourselves for use in VR and the metaverse at large, there are still challenges to be solved.

Simply generating a model that looks like you isn’t quite enough. You also need the model to move like you.

Every person has their own unique facial expressions and mannerisms which are easily identifiable by the people who know them well. Even if a face model is rigged for animation, unless it’s rigged in a way that’s specific to your expressions and able to draw from real examples of your expressions, a realistic avatar will never look quite like you when it’s in motion.

For people who don’t know you, that’s not too important because they don’t have a baseline of your expressions to draw from. But it would be important for your closest relationships, where even slight changes in a person’s usual facial expressions and mannerisms could indicate a range of conditions like being distracted, tired, or even drunk.

In an effort to address this specific challenge, Meta (not to be confused with Epic’s MetaHuman tool) has been working on its own system called Codec Avatars, which aims to animate a realistic model of your face in real time with completely believable animations that are unique to you.

Perhaps in the future we’ll see a fusion of systems like MetaHuman and Codec Avatars: one to allow easy creation of a lifelike digital avatar and another to animate that avatar in a way that’s unique and believably you.
